Cosmological Simulations for Sky Surveys

Key Investigators and Authors: Salman Habib (ANL), Jim Ahrens (LANL), Ann Almgren (LBNL), Scott Dodelson (FNAL), Nick Gnedin (FNAL), Katrin Heitmann (ANL), David Higdon (LANL), Peter Nugent (LBNL), Rob Ross (ANL), Anze Slosar (BNL), Risa Wechsler (SLAC), Martin White (UC Berkeley)

NERSC Projects: 1) Cosmological Simulations for Sky Surveys (cosmosim) 2) SciDAC-3: Computation-Driven Discovery for the Dark Universe (cusp) 3) Dark Energy Survey (des, not covered here) 4) Analysis and Serving of Data from Large-Scale Cosmological Simulations (hacc, NERSC Data Pilot project)

Project Description

Overview and Context

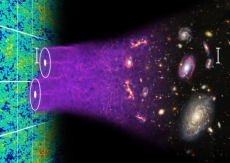

Modern cosmology is one of the most exciting areas in all of physical science. Progress over the last two decades has resulted in cementing a ‘Consensus Cosmology’ that, defined by only half a dozen parameters, is in excellent agreement with a host of cross-validated observations. Although the fact that a simple model can be so successful is already remarkable, three of its key ingredients – dark energy, dark matter, and inflation – point to future breakthrough discoveries in fundamental physics, as all require ingredients beyond the Standard Model of particle physics.

As drivers of progress along the broad front of the Cosmic Frontier research program, large-scale computing and data storage are of central importance. Computational cosmology functions in three key roles:

- Providing the direct means for cosmological discoveries that require a strong connection between theory and observations (‘precision cosmology’);

- As an essential ‘tool of discovery’ in dealing with large datasets generated by complex instruments; and,

- As a source of high-fidelity simulations that are necessary to understand and control systematics, especially astrophysical systematics.

High-end computing and storage are key components of cosmological simulations, which are among the largest computations being carried out today. These can be classified into two types: gravity-only N-body simulations, and ‘hydrodynamic’ simulations that incorporate gas dynamics, sub-grid modeling, and feedback effects. Because gravity dominates on large scales, and dark matter outweighs baryons by roughly a factor of five, gravity-only N-body simulations provide the bedrock on which all other techniques rest. These simulations can accurately describe matter clustering well out into the nonlinear regime, possess a wide dynamic range (Gpc to kpc, allowing coverage of survey-size volumes), have no free parameters, and can reach sub-percent accuracies. Several post-processing strategies exist to incorporate additional physics on top of the basic N-body simulation. Whenever the dynamics of baryons is important, substantially more complex computations are required. ‘Gastrophysics’ is added via either grid-based adaptive mesh refinement (AMR) solvers or via particle-based methods such as smoothed-particle hydrodynamics (SPH). Both classes of simulations require cutting-edge resources, and face limits imposed by the size and performance of even the largest and fastest supercomputers. Because large datasets with ever increasing complexity are routinely created by these simulations, major storage and post-processing requirements are associated with them. These are already at the ~PB level, and are bound to increase steeply with time.

Scientific Objectives in the 2017 Time Frame

The global “project,” which consists of three repositories at NERSC, and simulation and storage allocations at other centers (via ALCC, INCITE, and other awards), is targeted at multiple cosmological probes that are connected to the data stream from large-scale sky surveys, both ongoing and planned for the future. Ongoing surveys include the Baryon Oscillation Spectroscopic Survey (BOSS), the Dark Energy Survey (DES), and the South Pole Telescope (SPT). Future surveys include the Large Synoptic Survey Telescope (LSST) and the Mid-Scale Dark Energy Spectroscopic Instrument (MS-DESI) spectroscopic survey. To a very large extent, the simulation program is tied to the discovery science potential of these surveys. The different cosmic probes are each associated with a major computational campaign, of varying specificity. Below, we list a subset of important probes and associated computational projects that provide a flavor of the challenges ahead, leading on to the specific workplan that would eventually be implemented in 2017.

Baryon acoustic oscillations (BAO) accessed from galaxy surveys [BOSS, DES, MS-DESI, LSST] together provide a precision measurement of the geometry of the Universe at z < 1.6. The challenge here is the ability to run large-volume N-body simulations that can precisely determine the BAO signature in the power spectrum or the corresponding peak structure in the correlation function. At z > 2, the BAO signature can be extracted from the spatial statistics of the quasar Ly-α forest (BOSS, MS-DESI) – a probe of the intervening intergalactic medium (IGM). This requires running large hydrodynamics simulations to model the distribution of neutral hydrogen.

Cluster counts (DES, LSST) provide measurements of both geometry and structure growth. Here, large-volume N-body simulations are required to provide sufficient statistics, and hydrodynamic simulations are necessary to characterize observable-mass relations.

Weak gravitational lensing (DES, LSST) has multiple uses – measurements of geometry, structure growth, and cluster masses. All of these need large N-body and hydrodynamic simulations to accurately predict the mass distribution responsible for the lensing signal.

Redshift-space distortions (BOSS, DES, LSST, MS-DESI) measure the growth of structure and can test theories of modified gravity; these require large-volume N-body simulations to determine and characterize individual galaxy velocities.

Ly-α forest measurements of the matter power spectrum (BOSS, MS-DESI) are sensitive to small length scales and hence to probing the neutrino mass and thermal weakly interacting massive particle (WIMP) mass limits. Properly exploiting this probe demands hydrodynamics simulations with radiative transfer to interpret quasar spectra.

A significant computational task relates to the analysis of large datasets sourced by sky surveys and by simulations. The observational datasets are expected to range from ~1 PB for DES and ~100 PB for LSST, while simulation data generation is constrained only by storage and I/O bandwidth and can potentially produce much larger datasets. Traditional high performance computing platforms are quite unsuited to data-centric computations, and new approaches to scalable data-intensive computing are needed, certainly by 2017, if not much sooner. It is also apparent that managing a complex workflow with very large datasets will be a significant component of computing at the cosmic frontier. Aside from the intrinsic difficulties in theoretical modeling of the individual and collective science cases and dealing with large observational datasets, there is a major added complication: Data analysis in cosmology is in fact a high-dimensional problem of statistical inference where one solves for cosmological and modeling parameters, requiring many solutions of the forward model (predictions for the observations) within a Markov chain Monte Carlo (MCMC) framework. A large number of (de-rated) simulation runs are also needed to determine error covariances. These requirements motivate the development of a new set of fast statistical techniques that at the same time can provide results with small, controlled errors. These sorts of techniques will have become ubiquitous by 2017.

Computational Strategies

Approach

Computational cosmology is not by any means a single computational problem, but rather an interconnected and complex task combining forward predictions, observations, and scientific inference, all within the arena of high performance computing and very large datasets. The primary concern here is with structure formation-based probes; all of these essentially measure – directly or indirectly – the dark matter-dominated density field, or quantities related to it. The success of the overall approach rests on a solid first principles understanding of the basis of structure formation: very close to Gaussian initial fluctuations laid down by inflation (or some other process), to be later amplified by the gravitational instability giving rise to the complex structures observed today (the growth rate of structure is a competition between the attraction of gravity and the expansion of space). In a standard cosmological analysis, this process is fully described by general relativity and atomic physics.

The central computational problem is to generate accurate initial conditions (multi-scale, multi-species, as needed) and then to solve the Vlasov-Poisson equation for the purely gravitating species (dark matter) and include, along with gravity, gasdynamics, feedback, radiative processes, and sub-grid models for the baryonic matter. Because of the conflicting requirements of simulation volume and detailed treatment of small-scale physics, gravity-only N-body codes are used to handle the larger volumes, whereas hydro simulations are run at smaller volumes. One significant simulation task is to build a picture that can consistently include the information from hydro simulations within N-body runs. The data generated by the simulations can be very large and the global analysis task (e.g., constructing synthetic sky catalogs) is itself as complex as carrying out the simulation runs. Thus building the analysis frameworks is also a significant aspect of the overall computational strategy.

Finally, extracting science by combining simulation results and observational data is a separate endeavor, requiring the use of an MCMC framework (and alternatives), where forward predictions must be generated tens to hundreds of thousands of times. The complexity of a single prediction, both in terms of physics and numbers of parameters, obviously precludes brute force simulation runs as a viable approach. To overcome this problem, the ‘Cosmic Calibration Framework’ has been recently developed. The main idea behind the framework is to cover the cosmological and astrophysical model space in an efficient manner by using sophisticated statistical sampling methods and techniques for functional interpolation over high-dimensional spaces. In addition, because the cosmological ‘response surface’ is relatively smooth, and the current observational constraints limit the prior range substantially, the number of required simulations can be brought down into the hundreds, and allows MCMC analyses to be carried out with very fast numerical oracles for cosmic probes, the so-called emulators. Simulation campaigns to generate these emulators will be a key component of the planned work for 2017.

Codes and Algorithms

Large-scale cosmological N-body codes are essential for the success of all future cosmological surveys. As supercomputer architectures evolve in more challenging directions, it is essential to develop a powerful next generation of these codes that can simultaneously avail various types of many-core and heterogeneous architectures. This is the driver behind the development of the HACC (Hardware/Hybrid Accelerated Cosmology Codes) framework. HACC’s multi-algorithmic structure also attacks several weaknesses of conventional particle codes including limited vectorization, indirection, complex data structures, lack of threading, and short interaction lists. It combines MPI with a variety of local programming models (e.g., OpenCL, OpenMP) to readily adapt to different platforms. Currently, HACC is implemented on conventional and Cell/GPU-accelerated clusters, on the Blue Gene architecture, and is running on prototype Intel MIC hardware. HACC is the first, and currently the only large-scale cosmology code suite worldwide that can run at scale (and beyond) on all available supercomputer architectures.

HACC uses a hybrid parallel algorithmic structure, splitting the gravitational force calculation into a specially designed grid-based long/medium range spectral particle-mesh (PM) solver (based on a new high performance parallel 3D FFT, high-order Greens function, super-Lanczos derivatives, and k-space filtering) that is common to all architectures, and an architecture-tunable particle-based short/close-range solver. The grid is responsible for four orders of magnitude of dynamic range, while the particle methods – a blend of direct particle-particle and recursive coordinate bisection (RCB) tree/fast multipole (implemented via the pseudo-particle method) algorithms – handle the critical two orders of magnitude at the shortest scales where particle clustering is maximal and the bulk of the time-stepping computation takes place. Using a benchmark run of 3.6 trillion particles, HACC has demonstrated outstanding performance at close to 14 PFlops/s on the BG/Q (69% of peak) using more than 1.5 million cores and MPI ranks, at a concurrency level of 6.3 million. This is the highest level of performance yet attained by a science code on any computer. Production runs on Hopper with 30 and 68 billion particles have recently been carried out and test runs on Edison will begin soon. HACC development continues in several directions (optimization for Titan, I/O optimization, load balancing improvements, mapping to new architectures). An integrated in situ analysis framework, essential to reduce the I/O and storage workloads, is another HACC feature.

Aside from HACC, we also use Gadget, a public domain code developed primarily by Volker Springel, and TreePM, a similar code developed primarily by Martin White. Both codes use the TreePM algorithm and can scale to ~100K cores in MPI/OpenMP mode.

The collaboration brings together two state of the art cosmological hydrodynamics codes, ART and Nyx. The aim is to use and develop them in synergistic ways, so that they are applied to suit their respective strengths, yet with enough overlap such that results can always be tested with more than one code. The Adaptive Refinement Tree (ART) code is a high-performance cosmology code originally developed in the mid-nineties. Hydrodynamics capabilities were added later, and the code now includes several additional aspects of the physics relevant for the formation of galaxies and clusters. Examples of recent results from ART include an early study of the baryonic effects on weak lensing measurements and a new low-scatter X-ray mass indicator for galaxy clusters. Nyx is a newly developed N-body and gas dynamics code designed to run large problems on tens of thousands of processors. It is based on the BoxLib framework for structured grid adaptive mesh methods, supported as part of the SciDAC FASTMath Institute, and underlies a number of DOE codes in astrophysics and other areas. The use of BoxLib enables Nyx to capitalize on extensive previous efforts for attaining high performance on many processors. The parallelization strategy uses a hierarchical programming approach; excellent weak scaling of the hydrodynamic framework has been demonstrated up to 200K processors. Nyx has been successfully tested using two cosmology code comparison suites.

Both ART and Nyx follow the evolution of dark matter particles gravitationally coupled to a gas using a combination of multi-level particle-mesh and shock-capturing Eulerian methods. High dynamic range is achieved by applying adaptive mesh refinement to both gas dynamics and gravity calculations. The parallelization strategies implemented in ART and Nyx employ both MPI and OpenMP. Multigrid is used to solve the Poisson equation for self-gravity. In both codes, the same mesh structure that is used to update fluid quantities is also used to evolve the particles via the particle-mesh method. However, the two codes differ fundamentally in their approach to adaptivity. ART performs refinements locally on individual cells, and cells are organized in refinement trees, whereas Nyx uses a nested hierarchy of rectangular grids with refinements of the grids in space.

The ART code allows for modeling a wide range of physical processes. Specifically, the current version of the code includes the following physical ingredients (in addition to gravity, dark matter, and gas dynamics): (i) detailed atomic physics of the cosmic plasma, including a novel method for modeling radiative cooling of the gas; gas cooling functions implemented in ART are the most accurate of all existing cosmological codes; (ii) the effects of cosmic radiation on the gas; and (iii) formation of stars and their feedback on the cosmic gas. All this functionality is directly relevant to our science goals. Nyx contains the ‘stubs’ for attaching these types of additional physics packages.

About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy. »Learn more about computing sciences at Berkeley Lab.